Most of the ticketing systems by default use either MySQL or PostgreSQL and often stores the date-time of the DML events in Bigint format. When such tables data moved to SQL Server, the bigint value should be converted into date and time for readability and analysis purposes.

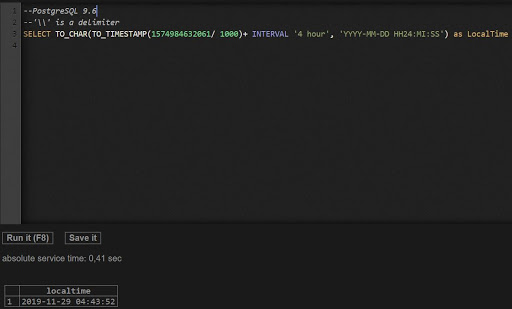

This is not new, however, this article will help you in converting the bigint value to readable UTC (Coordinated Universal Time) and local time in both SQL Server as well as PostgreSQL.

The below script works in SQL Server and converts the big integer value to UTC and local times.

DECLARE @BigIntVal BIGINT

SELECT @BigIntVal = 1574984632061

SELECT DATEADD(hh, +4, DATEADD(s, CONVERT(BIGINT, @BigIntVal) / 1000, CONVERT(DATETIME, '1-1-1970 00:00:00')))

DECLARE @BigIntVal BIGINT

SELECT @BigIntVal = 1574984632061

SELECT DATEADD(s, CONVERT(BIGINT, @BigIntVal) / 1000, CONVERT(DATETIME, '1-1-1970 00:00:00'))