Sometimes the data stores in the table in a different format in comparison to the real data type it belongs to. The date might be stored as an integer value or a string value, numerical values might be stored as a character string, the currency might be stored in number format. The reason or explanation could be whatever requested by the design or business. One cannot expect the same as an output, there we need casting, parsing, and conversion methods.

This article focuses on the following functions -

1) CAST

2) CONVERT

3) PARSE

4) TRY_PARSE

5) TRY_CAST

6) TRY_CONVERT

CAST:

Cast function converts an expression from one data type to another. This is ANSI-SQL specification.

Examples:

SELECT CAST(25.65 AS INT);

The query returns the value of 25.65 as 25 by converting the 'decimal' into an integer.

SELECT CAST(25.65 AS VARCHAR);

The query returns the value of 25.65 as 25.65 but the later one is a character string.

SELECT CAST('2017-08-25' AS DATETIME);

The query returns the value of "2017-08-25" as "2017-08-25 00:00:00.000" by converting the 'decimal' values into an integer.

Let's see one more example.

USE AdventureWorks2017;

GO

SELECT Name,

sellstartdate,

CAST(sellstartdate AS VARCHAR) converteddate,

ListPrice

FROM Production.Product

WHERE CAST(ListPrice AS int) LIKE '3%';

GO

CONVERT:

Examples:

SELECT CONVERT (INT, 25.65)

The query returns "25" by converting the 'decimal' into an integer.

SELECT CONVERT(DATETIME, '2017-08-25')

The query returns "2017-08-25 00:00:00.000" a proper DateTime format.

SELECT CONVERT(VARCHAR(10), GETDATE(), 101)

Let's see one more example.

USE AdventureWorks2017;

GO

SELECT Name,

sellstartdate,

CONVERT(VARCHAR(10),sellstartdate,101) converteddate,

ListPrice

FROM Production.Product

WHERE CONVERT(INT, ListPrice) LIKE '3%';

GO

Please click here for more information about the "CONVERT" function.

PARSE:

Returns

the result of an expression translated to the requested data type in SQL

Server. This function is not an alternative to CAST or CONVERT function though it converts the data type one to another.

Let's see this date - "Wednesday, 2 September 2020". If you see there isn't any issue with this date or its format as it's human-readable and we know that in some places people use such date format. However, built-in functions like 'CAST' or 'CONVERT' cannot convert such data that people of different timezones or different geo-locations used.

Let us test.

SELECT CAST('Wednesday, 2 September 2020' AS datetime)

SELECT CAST('Wednesday, 2 September 2020' AS datetime2)

SELECT CONVERT(DATETIME,'Wednesday, 2 September 2020')

SELECT CONVERT(DATETIME,'Wednesday,

2 September 2020')

All the above statements return 'conversion failed' error since CAST or CONVERT cannot convert such data into other formats.

SELECT PARSE('Wednesday, 2 September 2020' AS datetime)

SELECT PARSE('Wednesday, 2 September 2020' AS datetime2)

SELECT PARSE('Wednesday, 2 September 2020' AS datetime2 USING 'en-US');

The above three statements return the converted data as shown below.

Let's see one more example.

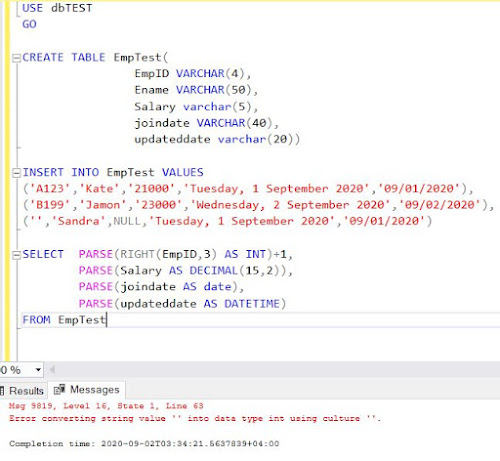

Above is a test data. EmployeeID usually is an integer value however intensionally it was assigned as VARCHAR. The same with Salary, "joindate" and "updatedate".

In the SELECT statement, +1 (plus one) at the end of the first parse statement is to check if it is convertible to a numeric value. If the outcome is not a number then addition will return an error.

PARSE will not proceed further and throws an error if there is any null value.

If the PARSE is used in a transaction and if there is any null, the whole transaction will be rolled back. This led to the introduction of TRY_PARSE.

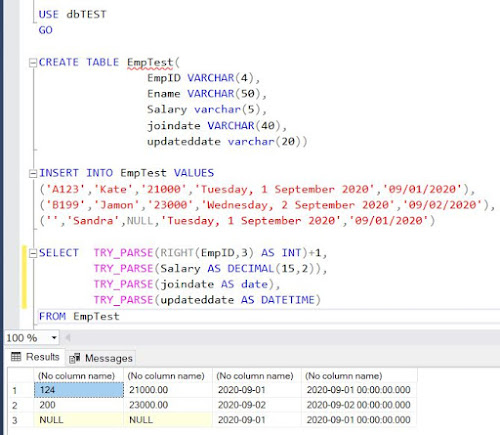

TRY_PARSE:

TRY_PARSE function is as same as PARSE; the only difference is it will return a null value when the conversion fails instead of canceling the whole conversion process.

USE dbTEST

GO

CREATE TABLE EmpTest(

EmpID

VARCHAR(4),

Ename

VARCHAR(50),

Salary

varchar(5),

joindate

VARCHAR(40),

updateddate

varchar(20))

INSERT INTO EmpTest VALUES

('A123','Kate','21000','Tuesday, 1 September 2020','09/01/2020'),

('B199','Jamon','23000','Wednesday, 2 September 2020','09/02/2020'),

('','Sandra',NULL,'Tuesday, 1

September 2020','09/01/2020')

SELECT TRY_PARSE(RIGHT(EmpID,3) AS INT)+1,

TRY_PARSE(Salary AS DECIMAL(15,2)),

TRY_PARSE(joindate AS date),

TRY_PARSE(updateddate AS DATETIME)

FROM EmpTest

Similarly, there are two more functions i.e. TRY_CONVERT and TRY_CAST. These two will also return a null value when the conversion fails, instead of cancelling the transaction and returning an error.

TRY_CONVERT:

SELECT TRY_CONVERT(INT, RIGHT(EmpID,3)),

TRY_CONVERT(DECIMAL(15,2), Salary),

TRY_CONVERT(DATETIME, updateddate)

FROM EmpTest

TRY_CAST:

SELECT TRY_CAST(RIGHT(EmpID,3) AS INT),

TRY_CAST(Salary AS DECIMAL(15,2)),

TRY_CAST(updateddate AS DATETIME)

FROM EmpTest