Commands are non-SQL statements such as setting a property or adding a resource. They can be used in HiveQL scripts or directly in the CLI or Beeline. The below commands are mostly used ones and are helpful while working with partitions, adding external jar files, and changing the configuration settings.

Tuesday, September 29, 2020

Commonly used Apache Hive non-SQL Statements

HDFS Basic Commands

This article will explore some Hadoop basic commands that help in our day-to-day activities.

Hadoop file system shell commands are organized in a similar way to Unix/Linux environments. For people who work with Unix shell, it is easy to turn to Hadoop shell commands. Such commands communicate with HDFS and other Hadoop-supported file systems.

1) List-out the contents of the directory.

2) Create or delete a directory

To check the status of safemode

hadoop dfsadmin -safemode get

To change the safemode to ON

hadoop dfsadmin -safemode enter

To change the safemode to OFF / or to

leave the safemode

hadoop fs -put <sourcefilepath> <destinationfilepath>

Examples:

hadoop fs -put Desktop/Documents/emp.txt /user/cloudera/empdir

hadoop fs -copyFromLocal Desktop/Documents/emp.txt /user/cloudera/emp.txt

To know more about "copyFromLocal", "put" "copyToLocal" and "get", please click here.

4) Read the file

hadoop fs -cat /user/cloudera/emp.txt

The above command helps in reading the file however, one has to avoid using this command for large files since it can impact on I/O. This command is good for

files with small data.

5) Copy the file from HDFS to Local

System

This is reverse scenario of Put & CopyFromLocal. For more information click here.

6) Move the file from one HDFS location

to another (HDFS location)

Hadoop fs -mv emp.txt testDir

Hadoop fs -mv testDir tesDir2

Hadoop fs -mv testDir2/testDir /user/cloudera

7) Admin Commands

To view the config settings

go

to --> computer-browse folder-filesystem-->etc-->hadoop-->conf-->hdfs-site.xml

To

change the default configuration values such as dfs.replication or dfs.blocksize from hdfs-site.xml, use the sudo commands

Click "I" for insert option or to bring it in edit mode.

Modify the values as per your

requirement.

To save and exit :wq!

hadoop fs -tail [-f]

<file>

The Hadoop fs shell tail command shows the last 1KB of a file on console or stdout.

File exists error in HDFS - CopyFromLocal

HDFS is a distributed file system designed to run on top of the local file system. Many times we may need to copy files from different sources i.e. from the internet, remote network, or from the local file system. There are "CopyFromLocal" and "Put" commands to help us in performing the task. While copying a file from the local file system to HDFS, if the file exists in the destination, the execution will fail and we will receive 'the file exists' error.

Let's assume the file "emp.txt" already exists in the path /user/cloudera.

Hadoop fs -put Desktop/emp.txt /user/cloudera/emp.txt

Hadoop fs -copyFromLocal Desktop/emp.txt /user/cloudera/emp.txt

Hadoop fs -copyFromLocal -f Desktop/Documents/emp.txt /user/cloudera/emp.txt

This is succeeded. The file is copied to the destination without any errors.

The usage of the "-f" option with -copyFromLocal will overwrite the destination if it already exists.

Monday, September 28, 2020

Difference between CopyFromLocal, Put, CopyToLocal and Get

hadoop fs -copyFromLocal <Local system directory path> <HDFS file path>

A choice exists to overwrite an existing file using -f when using copyFromLocal. However, an error is returned if the file persists when "put" is executed.

In short, anything you do with copyFromLocal, you can do with "put", but not vice-versa.

CopyToLocal and Get:

These two commands are just opposite to "CopyFromLocal" and "Put".

The destination is restricted to a local file reference when we use copyToLocal. While using "Get" there are no such restrictions.

Anything you do with copyToLocal, you can do with "get" but not vice-versa.

hadoop fs -get <HDFS file path> <Local system directory path>

hadoop fs -copyToLocal <HDFS file path> <Local system directory path>

For complete HDFS commands please click here. For complete Hive DDL commands please click here.

An alternative to ISNULL() and NVL() functions in Hive

The NVL() function enables you to substitute null for a more relevant alternative in the query results. This function accepts two arguments. If the first argument is null, then it returns the second argument. If the first argument is not null, it returns the first one and will ignore the second argument. This function is available in Oracle SQL*Plus, but not in MySQL, SQL Server, and Hive.

However, as an alternative, ISNULL() and COALESCE() functions can be used to achieve the same result in MySQL and SQL Server. Since ISNULL() is not available in Hive, COALESCE() function is the only option to achieve the desired output.

The difference between NVL() and COALESCE() is that COALESCE() will return the first non-null value from the list of expressions while NVL() only takes two parameters and returns the first if it is not null, otherwise, returns the second.

Let's see what these three functions will do.

Oracle:

SELECT first_name + middle_name + last_name As EmpName

FROM Employees;

Result:

Employees

---------------------------

Robert Finn Hill

Bruce M. Wills

Maria Andrew Brown

NULL

The last row is null because there is no middle name of the employee. NULL is returned when concatenated the null with first-name and last-name. There we use NVL() function.

SELECT first_name + NVL(middle_name, ' ') + last_name As EmpName

FROM Employees;

Result:

Employees

----------------------------

Robert Finn Hill

Bruce M. Wills

Maria Andrew Brown

Ashley Miller

SQL Server:

SELECT first_name + ISNULL(middle_name,'') + last_name As EmpName

FROM Employees;

SELECT first_name + COALESCE(middle_name,'') + last_name As EmpName

FROM Employees;

Hive:

SELECT first_name + COALESCE(middle_name,'') + last_name As EmpName

FROM Employees;

Hive Internal vs External Tables

- the data is being used outside the Hive. The data files are read and interpreted by an existing program that does not lock the files, for instance.

- data needs to stay in the underlying position even after a DROP TABLE. In other words, the data file always stays on the HDFS server even if you delete an external table. This also means that Metadata is maintained on the master node, and deleting an external table from HIVE only deletes the metadata not the data/file.

- you choose a custom place to be used, use external tables.

- Hive doesn't own the data.

- you are not creating a table based on an existing table (AS SELECT), use external tables.

- you are okay with the fact that External table files are accessible to anyone who has access to HDFS. Security needs to be handled at the HDFS folder level.

Create "Internal" tables when:

- the data is temporary.

- you want Hive to completely manage the lifecycle of the table and data.

- you want the data and metadata to be stored inside Hive's warehouse.

- You are okay with the fact that table deletion would also erase the master-node and HDFS metadata and actual data, respectively.

- you want the security of the data to be controlled solely via HIVE.

Conclusion:

In "Internal" tables, the table is created first and data is loaded later.

In "External" tables, the data is already present in HDFS and the table is created on top of it.

Big Data: Apache Hive & Impala Data Types Quick Reference

Range: -128 to 127

single precision floating point number

double precision floating point number

Timestamps were introduced in Hive 0.8.0. It supports traditional UNIX timestamp with the optional nanosecond precision.

The supported Timestamps format is yyyy-mm-dd hh:mm:ss[.f…].

TOP, LIMIT, ROWNUM vs DENSE_RANK

In SQL Server, using a TOP clause with a specified number of records with descending order of price?

In MySQL and Impala, using a LIMIT clause with a specified number of records with descending order of price?

Let's create some sample data and do some exercises to understand the scenario.

The following statement will create a "Products" table:

CREATE TABLE Products

(

ProductName STRING,

Price DECIMAL(7,2)

);

('Galaxy Chocolates',20),

('Kitkat Chocolates',22),

('Rainbow Chocolates',19),

('Americana Chocobread',26),

('Palm Milky Chocobars',28),

('Bounty chocolates',26),

(Sparkles chocos',23),

('Smiley Cocos',21),

('DelightPlus chocos',22),

('Softy chocobar',18),

('Minis chocos',8)

Now we have "Products" table with data.

Let's query against the table to retrieve "Products" data based on the descending order of "Price"

SELECT ProductName, Price FROM Products

ORDER BY Price DESC;

Now, let us retrieve the top ten product information based on the highest price using LIMIT clause.

SELECT ProductName, Price FROM Products

ORDER BY Price DESC

LIMIT 10;

This returned ten rows however by looking at the data we can say it is not giving the information what we are looking for. i.e. the top-ten product information. There are some products that have the same price hence it will be considered only Top-8 products.

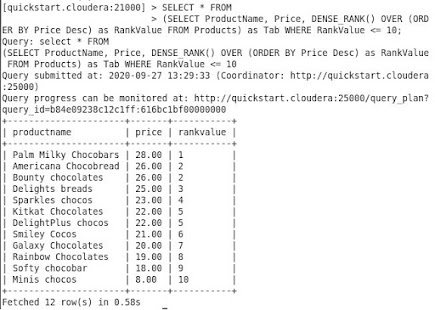

In this scenario, we need to use DENSE_RANK to fetch the ranking of the products based on their price.

SELECT ProductName, Price, DENSE_RANK() OVER (ORDER BY Price DESC)

AS RankValue FROM Products)

AS Tab

WHERE RankValue <= 10;

Hope you find this article helpful.

Sunday, September 27, 2020

Sqoop Complete Tutorial Part-7

This is the continuation part of "Sqoop Complete Tutorial". If you want to read -

18) Importing all tables from MySQL to Hive

Importing a table from MySQL to Hive's default database. The below command will help in copying all the tables from MySQL to Hive user database.

sqoop import-all-tables

--connect jdbc:mysql://localhost/empdept

--username root

--password cloudera

--hive-import

--hive-database dbTest

If you look at the result, the data is replicated. This is because the tables "emp" and "dept" already exists in the database. hive-overwrite will help in replacing the data if already exist.

sqoop import-all-tables

--connect jdbc:mysql://localhost/empdept

--username root

--password cloudera

--hive-import

--warehouse-dir /user/hive/warehouse/dbtest

--hive-database dbtest

--hive-overwrite

19) Importing all tables but excluding few from MySQL to Hive

I have created a table named "location" in my current database 'empdept'.

I am about to import all tables but excluding 'emp' and 'dept' since those were already imported. Since "location" is the only table to import, I can specify the table name, however, let's see how it can be done with sqoop-import-all.

--connect jdbc:mysql://localhost/empdept

--username root

--password cloudera

--hive-import

--hive-database dbtest

--exclude-tables "emp,dept"

Sqoop Complete Tutorial Part-6

This is the continuation part of "Sqoop Complete Tutorial". If you want to read -

16) Importing a table from MySQL to Hive's default database.

Importing a table from MySQL to Hive's default database. The below command will help in copying "emp" table and data from MySQL to Hive "default" database as "employee".

sqoop import

--connect jdbc:mysql://localhost/empdept

--table emp

--username root

--password cloudera

--hive-import

--hive-table employees

17) Importing a table from MySQL to Hive's user database.

sqoop import

--connect jdbc:mysql://localhost/empdept

--table emp

--username root

--password cloudera

--hive-import

--hive-table employees

--hive-database dbTest

Verifying the data:

Please click here for the next part.

Big Data & SQL

Hi Everybody, Please do visit my new blog that has much more information about Big Data and SQL. The site covers big data and almost all the...

-

The CONCAT() function joins the input strings or different column values and returns as a single string. However, if we need to concatenate ...

-

There is a ‘ CharIndex’ function in SQL Server which is similar to the Oracle ‘ Instr’ function. In Oracle, the syntax of the INSTR functi...

-

Either SQL Developers or Data Analysts often use arithmetic operators in their queries such as =, >, <, != Apart from this, LIKE, EX...