In SQL Server, using a TOP clause with a specified number of records with descending order of price?

In MySQL and Impala, using a LIMIT clause with a specified number of records with descending order of price?

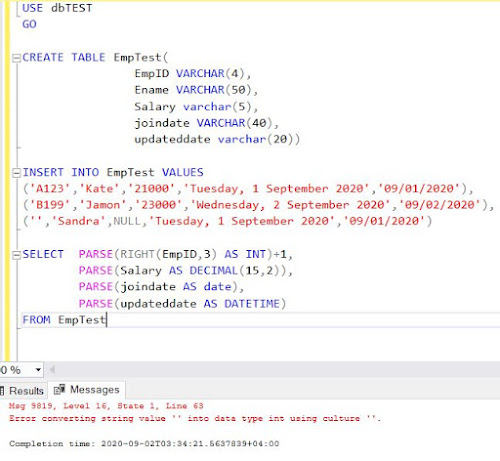

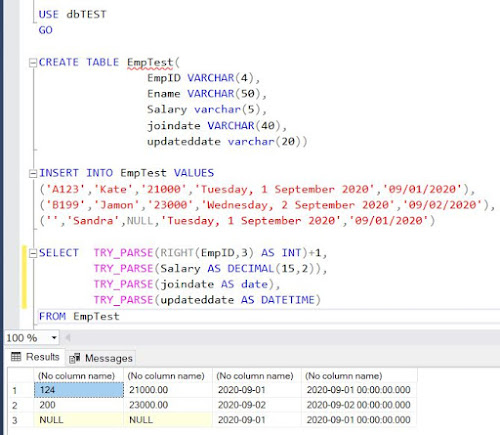

Let's create some sample data and do some exercises to understand the scenario.

The following statement will create a "Products" table:

CREATE TABLE Products

(

ProductName STRING,

Price DECIMAL(7,2)

);

('Galaxy Chocolates',20),

('Kitkat Chocolates',22),

('Rainbow Chocolates',19),

('Americana Chocobread',26),

('Palm Milky Chocobars',28),

('Bounty chocolates',26),

(Sparkles chocos',23),

('Smiley Cocos',21),

('DelightPlus chocos',22),

('Softy chocobar',18),

('Minis chocos',8)

Now we have "Products" table with data.

Let's query against the table to retrieve "Products" data based on the descending order of "Price"

SELECT ProductName, Price FROM Products

ORDER BY Price DESC;

Now, let us retrieve the top ten product information based on the highest price using LIMIT clause.

SELECT ProductName, Price FROM Products

ORDER BY Price DESC

LIMIT 10;

This returned ten rows however by looking at the data we can say it is not giving the information what we are looking for. i.e. the top-ten product information. There are some products that have the same price hence it will be considered only Top-8 products.

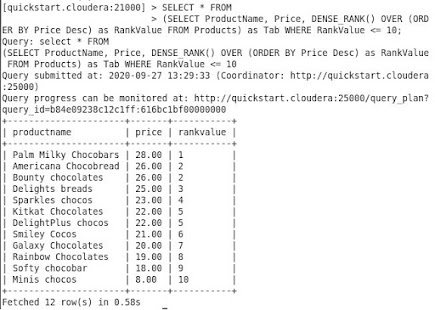

In this scenario, we need to use DENSE_RANK to fetch the ranking of the products based on their price.

SELECT ProductName, Price, DENSE_RANK() OVER (ORDER BY Price DESC)

AS RankValue FROM Products)

AS Tab

WHERE RankValue <= 10;

Hope you find this article helpful.